
Cornelis Networks and Supermicro Deliver Rack Scale Solutions with Advanced Networking and Omni-Path Express

High-Performance Computing and Artificial Intelligence Training Need Fast and Linear Scaling for Distributed Applications.

Introduction

Combining industry-leading performance, scalability, and affordability, a Rack Scale Solution comprising Supermicro BigTwin® SuperServer systems and Cornelis Networks Omni-Path Express (OPX) networking is a compelling choice for the Manufacturing, Life Sciences, Climate, and Energy High-Performance Computing (HPC) verticals.

Solution Highlights

Cornelis Networks provides open, intelligent, high-performance networking solutions designed to accelerate the world’s most demanding AI and HPC applications.

Supermicro delivers Rack Scale Solutions, which are thoroughly tested to customer requirements. Supermicro and Cornelis Networks used industry-leading, highly configurable BigTwin multi-node servers for these tests.

Target Markets

The following are use cases for HPC using Cornelis Networks and Supermicro BigTwin:

- Manufacturing: HPC and AI technologies enable manufacturers to simulate and model products and production processes at previously impossible scales. This allows manufacturers to design better products and optimize manufacturing processes. For example, in the automotive industry, manufacturers use HPC to simulate vehicle crashes and predict the behavior of materials under extreme conditions, which helps in designing safer and more durable vehicles.

- Bio and Life Sciences: HPC bio and life sciences workloads center on applications such as genomics, proteomics, pharmaceutical research, bioinformatics, drug discovery, bioanalytical portals, and agricultural research. Computational techniques include database searching, molecular modeling, and computational chemistry. These workloads appear in commercial, academic, and institutional research environments.

- Climate: HPC climate and weather workloads center on applications such as atmospheric modeling, meteorology, weather forecasting, and climate modeling. These applications simulate, predict, and analyze weather and climate patterns to help develop strategies for responding to the world’s changing weather and climate conditions.

- Energy: HPC energy and geosciences workloads include earth resources-related applications such as seismic analysis, oil services, reservoir modeling, mining, natural resource management, GIS, mapping, and pollution modeling. These applications are used in both institutional research and commercial enterprise.

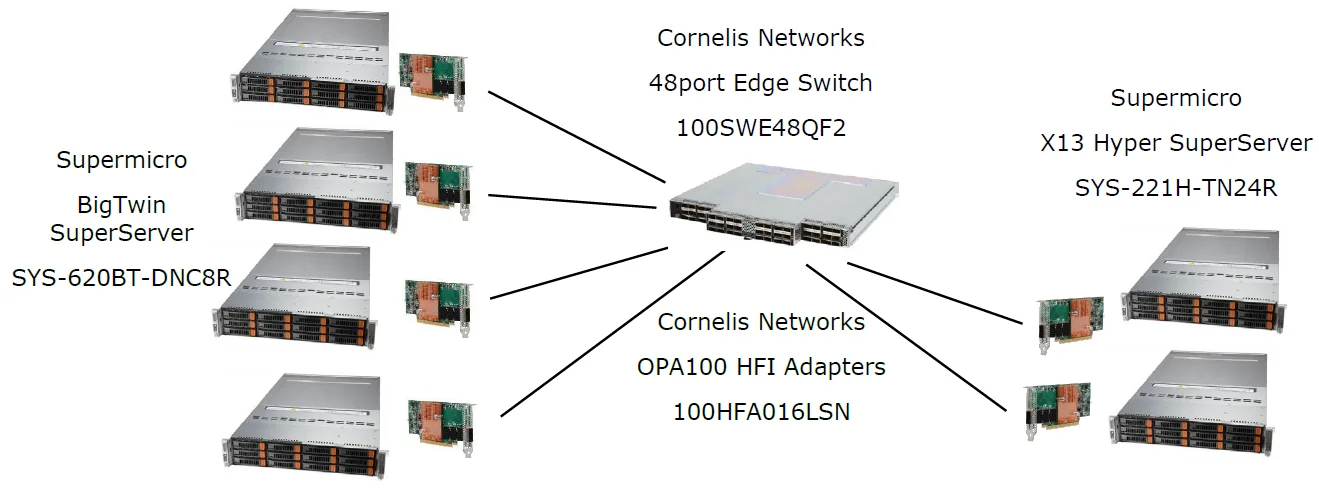

System Configuration Under Test

A test cluster was set up to run the OpenFOAM® application, a free, open-source solver. OpenFOAM has extensive features for solving problems ranging from complex fluid flows involving chemical reactions, turbulence, and heat transfer to acoustics, solid mechanics, and electromagnetics. The test cluster consisted of the equipment shown in Figure 2 below and detailed under System Configurations.

Benchmark Results

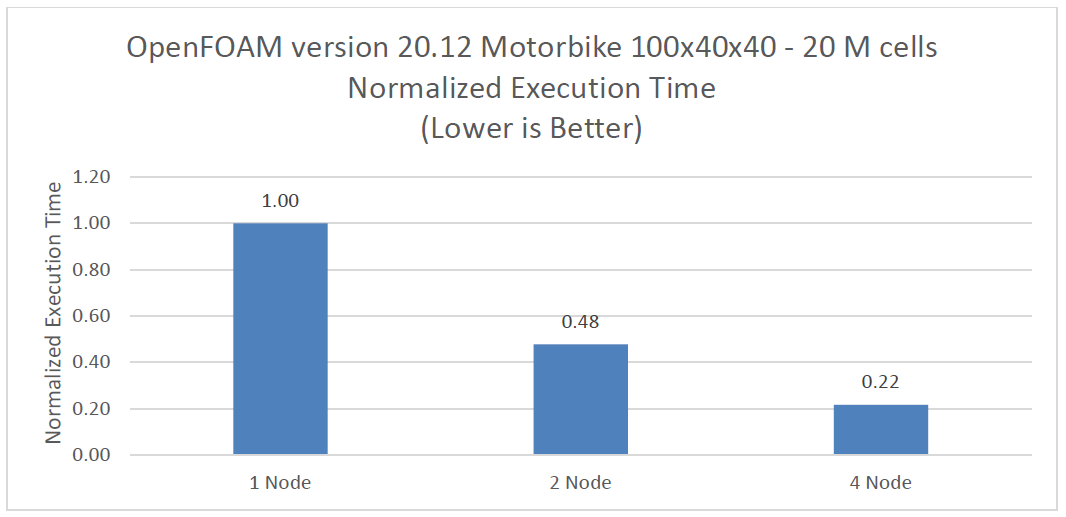

OpenFOAM

OpenFOAM is a suite of solvers and pre- and post-processing utilities developed to solve modeling and simulation problems in computational fluid dynamics (CFD). The simulations here model low-speed (incompressible) airflow around a motorcycle and rider. A 20-million-cell test case was used as the benchmark for this Supermicro Rack Scale 4-node test cluster. The results demonstrated strong scaling, with performance roughly doubling each time the node count doubled, from 1 to 2 to 4. After BeeGFS storage was added to the cluster, the same OpenFOAM test was rerun and showed the same strong scaling on a complete Supermicro rack scale solution with storage. Superlinear scaling was observed: measured by wall-clock time (1518 s on 1 node versus 337 s on 4 nodes), the 4-node system ran the application about 4.5X faster than a single node.

1 Node:

Time = 250

smoothSolver: Solving for Ux, Initial residual = 0.000629364, Final residual = 3.86023e-05, No Iterations 4

smoothSolver: Solving for Uy, Initial residual = 0.0118888, Final residual = 0.000761737, No Iterations 4

smoothSolver: Solving for Uz, Initial residual = 0.00764538, Final residual = 0.00047838, No Iterations 4

GAMG: Solving for p, Initial residual = 0.0678405, Final residual = 0.000453222, No Iterations 4

time step continuity errors : sum local = 3.93061e-05, global = -5.4744e-08, cumulative = 5.4418e-05

smoothSolver: Solving for omega, Initial residual = 9.51434e-05, Final residual = 9.10808e-06, No Iterations 3

bounding omega, min: -2164.24 max: 5.96083e+06 average: 1442.87

smoothSolver: Solving for k, Initial residual = 0.00277382, Final residual = 0.000150564, No Iterations 4

bounding k, min: -3.02232e-07 max: 77.2499 average: 0.849685

ExecutionTime = 1509.68 s ClockTime = 1518 s

2 Nodes:

Time = 250

smoothSolver: Solving for Ux, Initial residual = 0.000620451, Final residual = 3.85259e-05, No Iterations 4

smoothSolver: Solving for Uy, Initial residual = 0.0116966, Final residual = 0.000759766, No Iterations 4

smoothSolver: Solving for Uz, Initial residual = 0.00764462, Final residual = 0.00048455, No Iterations 4

GAMG: Solving for p, Initial residual = 0.0671181, Final residual = 0.000464396, No Iterations 4

time step continuity errors : sum local = 4.04058e-05, global = 1.25414e-07, cumulative = 4.74786e-05

smoothSolver: Solving for omega, Initial residual = 9.477e-05, Final residual = 9.05185e-06, No Iterations 3

bounding omega, min: -1815.64 max: 5.96082e+06 average: 1440.99

smoothSolver: Solving for k, Initial residual = 0.0027458, Final residual = 0.000150018, No Iterations 4

ExecutionTime = 722.14 s ClockTime = 729 s

4 Nodes:

Time = 250

smoothSolver: Solving for Ux, Initial residual = 0.000629982, Final residual = 4.00174e-05, No Iterations 4

smoothSolver: Solving for Uy, Initial residual = 0.0118336, Final residual = 0.000783449, No Iterations 4

smoothSolver: Solving for Uz, Initial residual = 0.00764686, Final residual = 0.000495655, No Iterations 4

GAMG: Solving for p, Initial residual = 0.0680713, Final residual = 0.000467714, No Iterations 4

time step continuity errors : sum local = 4.07382e-05, global = -1.64935e-08, cumulative = 3.00231e-05

smoothSolver: Solving for omega, Initial residual = 9.57868e-05, Final residual = 9.3438e-06, No Iterations 3

bounding omega, min: -2022.05 max: 5.96083e+06 average: 1441.18

smoothSolver: Solving for k, Initial residual = 0.00276423, Final residual = 0.000154907, No Iterations 4

bounding k, min: -1.46983e-05 max: 69.6017 average: 0.850025

ExecutionTime = 328.45 s ClockTime = 337 s
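The scaling figures can be checked directly from the final timing line of each log. The following is a minimal Python sketch, not part of the original test harness: the function names `parse_timing` and `scaling_table` are illustrative, and the only inputs are the three timing lines reported above. Speedup is computed as the 1-node ClockTime divided by the N-node ClockTime, and parallel efficiency as speedup divided by N.

```python
import re

# Final timing lines copied from the three solver logs above.
FINAL_LINES = {
    1: "ExecutionTime = 1509.68 s ClockTime = 1518 s",
    2: "ExecutionTime = 722.14 s ClockTime = 729 s",
    4: "ExecutionTime = 328.45 s ClockTime = 337 s",
}

# Matches the timing line that the solver prints at the end of each step.
TIMING = re.compile(r"ExecutionTime = ([\d.]+) s\s+ClockTime = ([\d.]+) s")


def parse_timing(line):
    """Return (execution_time, clock_time) in seconds from a timing line."""
    match = TIMING.search(line)
    if match is None:
        raise ValueError(f"no timing found in: {line!r}")
    return float(match.group(1)), float(match.group(2))


def scaling_table(final_lines):
    """Compute speedup and efficiency relative to the smallest node count."""
    clock = {nodes: parse_timing(line)[1] for nodes, line in final_lines.items()}
    base_nodes = min(clock)
    rows = []
    for nodes in sorted(clock):
        speedup = clock[base_nodes] / clock[nodes]
        efficiency = speedup / (nodes / base_nodes)
        rows.append((nodes, clock[nodes], speedup, efficiency))
    return rows


for nodes, seconds, speedup, efficiency in scaling_table(FINAL_LINES):
    print(f"{nodes} node(s): {seconds:.0f} s, "
          f"speedup {speedup:.2f}x, efficiency {efficiency:.0%}")
```

An efficiency above 100% is what "superlinear" means here: the 4-node run finishes in less than one quarter of the single-node wall-clock time.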

Conclusions

The test results demonstrate that the Supermicro BigTwin SuperServer Rack Scale solution, combined with the Cornelis Networks OPX 100Gb interconnect, is a highly efficient, price-performant solution for the HPC Manufacturing, Life Sciences, Climate, and Energy verticals, and is readily available with minimal lead time.

System Configurations

Compute Nodes: Two Supermicro BigTwin® SYS-620BT-DNC8R servers with dual-socket Intel® Xeon® Platinum 8352V @ 2.10GHz, 36-Core Processors, 128 GB DDR4 memory, 3200 MT/s, and single Cornelis Networks Omni-Path 100HFA016LSN Adapter. Two Supermicro BigTwin® SYS-620BT-DNC8R servers with dual-socket Intel® Xeon® Gold 6338N @ 2.20GHz, 32-Core Processors, 128 GB DDR4 memory, 2666 MT/s, and single Cornelis Networks Omni-Path 100HFA016LSN Adapter.

Storage Nodes: Two Supermicro X13 Hyper SuperServer SYS-221H-TN24R with dual-socket AMD EPYC 7543 32-Core processors, 256 GB DDR memory, 6x 1.6TB INTEL SSDPE2KE016T8, and single Cornelis Networks Omni-Path 100HFA016LSN Adapter.

Compute and Storage Software: Clients running RHEL 9.1; Storage nodes running Rocky Linux 9.2, Linux kernel 5.14.0-70.13.1el9. BeeGFS client service version 7.4.0, BeeGFS mgmtd, meta, and storage service version 7.4.0 running on all nodes.

Cornelis Networks Hardware: Six Omni-Path 100HFA016LSN PCIe Adapters, one Omni-Path 100SWE48QF2 48 port Edge Switch, and six Omni-Path 100CQQF2630 3-meter passive copper cables.

Cornelis Networks Software: Host: OPXS version 10.13.0.0.10. Switch: Firmware version 10.8.5.

Application Software: OpenFOAM version 20.12, motorbike-100x40x40-20M cells. Open MPI 4.1.4 as packaged with OPXS version 10.13.0.0.10. Run-time flags: -mca mtl psm2 -mca btl self,vader.
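To illustrate where the run-time flags fit, the sketch below assembles an Open MPI launch line in Python. Only the MCA flags come from the configuration above. The rank count, the hostfile name `hosts.txt`, and the solver name `simpleFoam` are assumptions made for illustration, since the source does not state the launch command that was used.

```python
import shlex

# Run-time flags quoted in the configuration above: use the PSM2 MTL for
# Omni-Path traffic and restrict BTLs to self and shared memory (vader).
OPX_MCA_FLAGS = ["-mca", "mtl", "psm2", "-mca", "btl", "self,vader"]


def build_mpirun_command(ranks, hostfile, solver="simpleFoam"):
    """Assemble an mpirun argument list for a decomposed OpenFOAM case.

    ranks, hostfile, and solver are hypothetical values chosen for this
    sketch; the source does not specify them.
    """
    if ranks < 1:
        raise ValueError("ranks must be positive")
    return [
        "mpirun", "-np", str(ranks), "--hostfile", hostfile,
        *OPX_MCA_FLAGS,
        solver, "-parallel",
    ]


# Hypothetical example: 128 MPI ranks spread over the hosts in hosts.txt.
command = build_mpirun_command(ranks=128, hostfile="hosts.txt")
print(shlex.join(command))
```

Building the command as a list keeps each argument separate, so it can be passed to `subprocess.run` without shell quoting concerns.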

For More Information:

Contact Cornelis Networks Sales: sales@cornelisnetworks.com.

About the Partners

Cornelis Networks is a technology leader delivering purpose-built, high-performance fabrics that accelerate high-performance computing, high-performance data analytics, and artificial intelligence workloads in the cloud and in the data center. The company’s products enable scientific, academic, governmental, and commercial customers to solve some of the world’s toughest challenges by efficiently focusing the computational power of many processing devices at scale on a single problem, simultaneously improving both result accuracy and time-to-solution for their most complex application workloads. For more information, visit: cornelisnetworks.com.

As a global leader in high-performance, high-efficiency server technology and innovation, Supermicro develops and provides end-to-end green computing solutions to the data center, cloud computing, enterprise IT, big data, high-performance computing, and embedded markets. Supermicro’s Building Block Solutions® approach provides a broad range of SKUs and enables the building and delivery of application-optimized solutions based upon the customer’s requirements. For more information, visit: www.supermicro.com.