Inside the Guts of AI Networking: Building a Fabric That Thinks

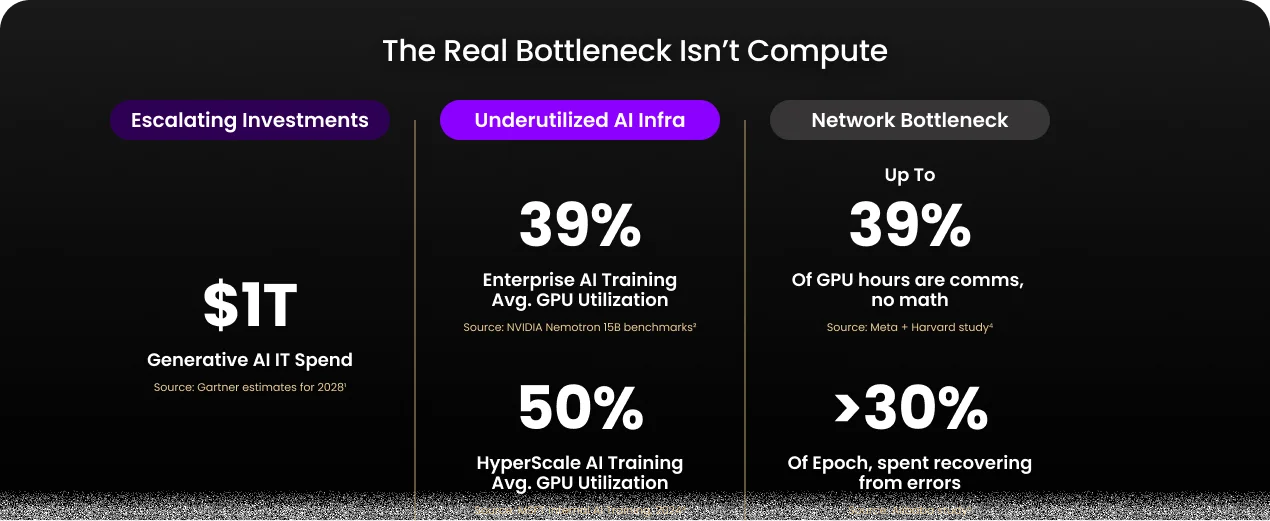

Every major enterprise and cloud provider is racing to scale AI infrastructure. Gartner® projects over $1 trillion1 in annual AI IT spend by 2028—a staggering figure that reflects the world’s obsession with compute power. Yet beneath the headlines and GPU/XPU arms race lies a quiet, expensive truth: most of that compute isn’t being used efficiently.

According to benchmark studies, enterprise AI training environments average just 39 percent GPU utilization2, while even HyperScalers—the most technically sophisticated organizations on the planet—hover around 50 percent3. Half of the world’s most advanced AI hardware sits idle.

The Real Bottleneck Isn’t Compute — It’s the Network

Why does so much of that investment go underutilized? Despite the industry’s focus on faster chips, and denser nodes, the true bottleneck lies in the network fabric that ties them together.

Studies from Meta®+ Harvard® and Alibaba® reveal that:

Up to 32 percent4 of GPU hours are consumed by inter-node communication—not computation.

Roughly 30 percent5 of each training epoch is spent recovering from errors, including network link errors.

In other words, GPUs aren’t running out of math—they’re running out of messages.

It’s not that today’s networks are slow. We already have 400 Gb/s and 800 Gb/s links, mature low-latency protocols like InfiniBand and RoCEv2, and record-breaking HBMs. The problem isn’t speed—it’s efficiency. Congestion, packet loss, and non-deterministic routing continue to plague large-scale AI training clusters, especially beyond the few-hundred-GPUs threshold.

Why network speed alone doesn’t solve scale

The networking industry has done its part on the glamour metrics—bandwidth, link rate, line encoding, SerDes speed. But as clusters scale past a few hundred GPUs, the “guts” of the network begin to break down.

At that scale, pause frames, head-of-line blocking, and cascading congestion become chronic. Packets are dropped and replayed. Latency tails stretch into milliseconds. The collective operations that underpin AI training—AllReduce, AllGather, Broadcast—stall, causing global slowdowns across the fabric.

No matter how fast your scale out network links are, if the “guts” of the network—flow control and routing logic can’t keep up with data dependency and synchronization patterns in distributed training, performance collapses.

Rethinking the Guts of AI Networking

That’s where the Cornelis® Omni-Path® architecture enters the story. It’s built from the ground up to solve the invisible inefficiencies throttling AI performance. Its approach centers on lossless, congestion-free transmission with architectural features designed for deterministic performance under load, much like what the industry is racing to build with Ultra Ethernet—but available now.

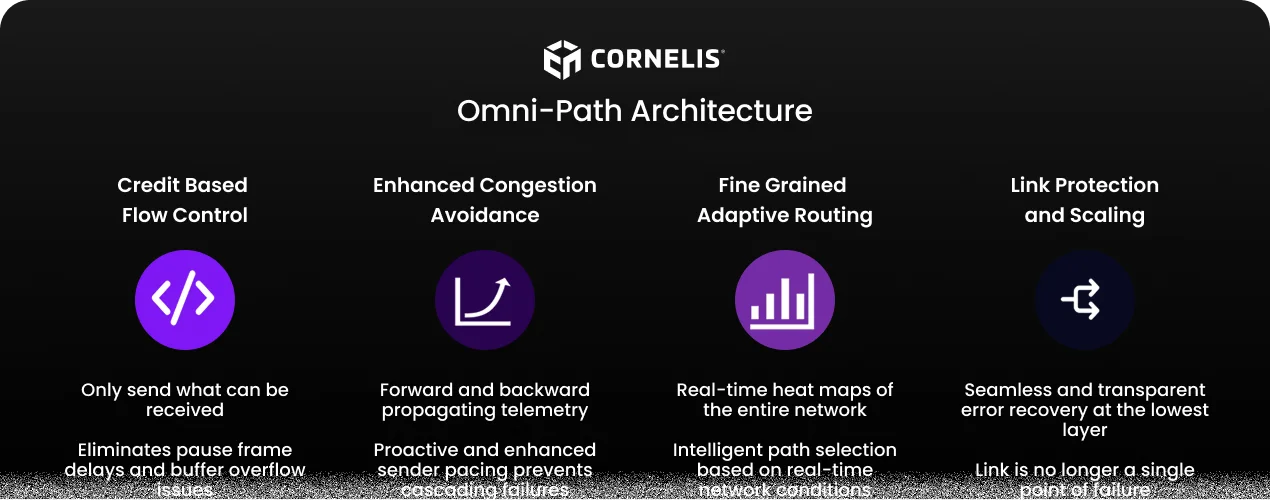

Key architectural pillars of Omni-Path include:

Credit-Based Flow Control

Ensures packets are sent only when receive buffers have available credits—preventing overrun and eliminating pause frames. Credit based flow control allows per-virtual-lane flow regulation, enabling fair allocation across competing workloads.

Enhanced Congestion Avoidance

Utilizes forward and backward-propagating telemetry to dynamically adjust pacing at the source. This avoids the classic cascading congestion seen in oversubscribed topologies and maintains consistent throughput even under hot-spot pressure.

Fine-Grained Adaptive Routing

Real-time telemetry builds a global heatmap of switch buffer utilization, enabling path selection based on current network state rather than static tables. It automatically bypasses congested paths, reducing long-tail latency and improving collective efficiency.

Dynamic Lane Scaling & Link-Level Replay

Each link can degrade gracefully if individual lanes fail, maintaining connectivity instead of triggering a full path teardown. Link-level replay catches forward-error-correction (FEC) misses at the hardware layer, eliminating costly end-to-end retransmissions.

Together, these techniques make Cornelis Omni-Path a “decisive”, “active” and “aware” aka a thinking network vs. a traditional best-effort network (Ethernet or Infiniband) —one that keeps GPUs doing what they do best: compute.

What this means in practice aka Business Outcomes

The payoff is substantial. Networks built on the Omni-Path architecture have demonstrated:

~35 percent lower latency6 than leading industry alternatives.

Ability to scale to 500,000 endpoints6 that will power the AGI era.

Faster collectives and All-Reduce operations, shortening training cycles and delivering faster time-to-insight and time-to-revenue.

Put simply, more tokens per dollar, per watt, per second.

Open, Interoperable, and Ready for the AI Era

What makes this particularly notable is that Cornelis has a roadmap to layering these innovations onto Ultra Ethernet standards (UEC), combining breakthrough network efficiency with the interoperability and supply-chain flexibility that data centers demand. The result: soon customers can deploy SuperNICs, top-of-rack, and director-class switches from Cornelis—or mix and match within the broader Ethernet ecosystem.

The Network AI Has Been Waiting For

AI infrastructure has advanced at a breathtaking pace. Compute accelerators, model architectures, and memory/storage systems have all evolved—but the network has lagged behind. It’s time to close that gap.

By redesigning the “guts” of the network—flow control, routing, and recovery mechanisms—Cornelis Omni-Path is unlocking the full potential of AI clusters.

The trillion-dollar AI era deserves a network worthy of it.

And it’s finally here. It thinks and not stinks of green.

- https://www.gartner.com/en/webinar/676097/1510999

- https://github.com/NVIDIA/dgxc-benchmarking

- https://www.microsoft.com/en-us/research/publication/an-empirical-study-on-low-gpu-utilization-of-deep-learning-jobs/

- https://arxiv.org/pdf/2310.02784

- https://arxiv.org/html/2406.04594v2

- Cornelis Internal estimates/benchmarks