NASA's Global Modeling and Assimilation Office Helps Keep NASA Flying

Discover cluster with 6.7 petaFLOPS of peak capacity will enable high-resolution weather prediction and climate simulation and modeling.

Discover Highlights

Multiple existing Scalable Compute Units comprising over 103,000 cores

New upgrade to include 640 Supermicro FatTwin dual-socket nodes with Intel® Xeon® Gold 6148 processors

Intel® Omni-Path Architecture fabric

Over 6 petaFLOPS post-upgrade peak computing capacity

Executive Summary

NASA's Global Modeling and Assimilation Office (GMAO) is located at the Goddard Space Flight Center in Greenbelt, Maryland. The office’s mission is to "maximize the impact of satellite observations on analyses and predictions of the atmosphere, ocean, land, and cryosphere."

One of the GMAO’s activities is to use the data from over 5,000,000 observations acquired every six hours to predict weather and model climate on a global scale, sometimes with resolutions as fine as 1.5 km². The global surface area of Earth is 510,072,000 km² (196,939,900.212 mi²) with water covering 70.8 percent and land taking up 29.2 percent.

Predicting weather and modeling climate at these scales takes a great deal of computing power, which is provided by NASA Goddard’s Computational and Information Sciences and Technology Office (CISTO).

Challenge

"We support a variety of NASA's missions and field campaigns with weather predictions and other atmospheric and climate research analyses, some of which are consumed by other organizations," explained Dr. Bill Putman, a GMAO researcher.

GMAO products include a wide range of data, such as:

Short-range (days to a week) numerical weather predictions that support activities such as satellite missions and NASA research aircraft flying specific paths to capture samples for aerosol or chemical studies during NASA field campaigns

Longer-range, seasonal predictions (weeks to months), often in collaboration with other agencies, such as the National Oceanic and Atmospheric Administration (NOAA)

Other large collaborative efforts with national and international organizations

Re-analyses of very long-term (years to decades) climate and atmospheric conditions, with published data that other researchers can use as global climate baselines

"There are only a few centers around the world," added Putman, "that produce these types of high-quality re-analyses. They become very reputable data sets and useful for services around the nation and the world."

GMAO's short-range weather predictions are based on NASA's Goddard Earth Observing System (GEOS) model, which is run four times a day. The analysis integrates over 5,000,000 observations every six hours from satellites, weather balloons, and sensors deployed on land, in aircraft, on ships, and on ocean buoys around the world.

Longer-term predictions are based on seasonal ensemble predictions that cover a variety of possible outcomes over weeks to months. Additionally, GMAO supports computational research and development that often leads to new data products or advanced production systems.

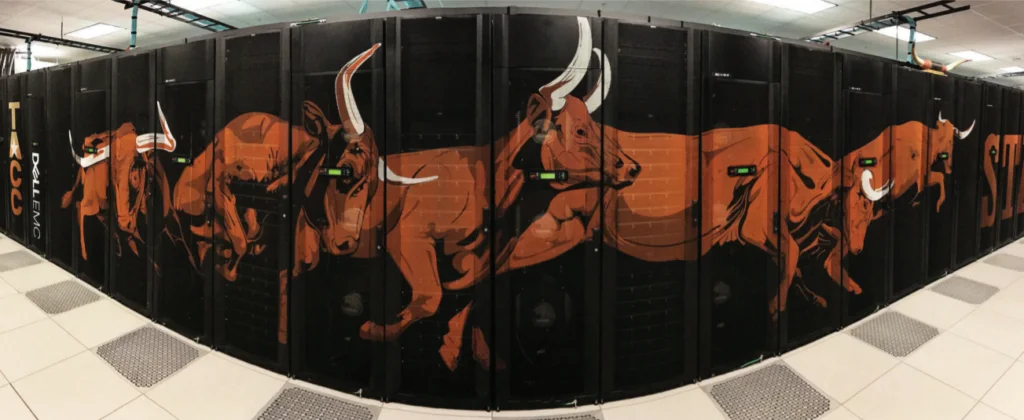

To support the level of computing that GMAO needs, the NASA Center for Climate Simulation (NCCS) in the Computational and Information Sciences and Technology Office (CISTO) deploys large capability supercomputing clusters. The current system, called Discover, has evolved over several years of scaling out to accommodate the computational demands of GMAO and other departments.

Solution

"Every year we upgrade a portion of the cluster," stated Dan Duffy, CISTO Chief. "Our current cluster of 103,000 cores delivers over 5 petaFLOPS of peak capacity. It supports GMAO, the Goddard Institute for Space Studies (GISS), multiple field campaigns, and other NASA science research. With the newest Intel upgrade, we will have over 129,000 cores at a peak capacity of 6.7 petaFLOPS."

Discover is an Intel® processor-based supercomputing cluster built from several Scalable Compute Units (SCUs). It is designed for fine-scale, high-fidelity atmosphere and ocean simulations that span from days to decades and centuries, depending on the application (weather prediction, atmospheric and climate modeling, ensemble forecasts, etc.).

NASA purchased many Scalable Compute Units over the years from different vendors, including Dell, IBM, and SGI. The latest SCU consists of 640 Supermicro FatTwin nodes with two Intel® Xeon® Gold 6148 processors per node interconnected by Intel® Omni-Path Architecture (Intel® OPA) fabric. The newest SCU delivers 1.86 petaFLOPS of additional peak computing capacity.
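The node and core figures for the newest SCU can be cross-checked with a little arithmetic. The sketch below assumes 20 cores per Intel Xeon Gold 6148 processor, a value that is not stated in the text above; the variable names are illustrative only. Under that assumption the result matches the 25,600-core figure quoted in the Solution Summary, and the combined total lands close to the "over 129,000 cores" that Duffy cites (the existing cluster is described only as "over 103,000" cores, so the sum is a lower bound).

```python
# Rough cross-check of the newest SCU's size; a sketch, not an official sizing.
NODES = 640                 # Supermicro FatTwin nodes in the newest SCU (from the text)
SOCKETS_PER_NODE = 2        # dual-socket nodes (from the text)
CORES_PER_SOCKET = 20       # ASSUMPTION: core count of one Xeon Gold 6148

EXISTING_CORES = 103_000    # "over 103,000 cores" before the upgrade (lower bound)
NEW_PEAK_PFLOPS = 1.86      # additional peak capacity of the newest SCU (from the text)

new_cores = NODES * SOCKETS_PER_NODE * CORES_PER_SOCKET
total_cores = EXISTING_CORES + new_cores

# Implied peak per core in GFLOPS (1 petaFLOPS = 1e6 GFLOPS).
gflops_per_core = NEW_PEAK_PFLOPS * 1e6 / new_cores

print(f"New SCU cores:       {new_cores:,}")
print(f"Total cores (min):   {total_cores:,}")
print(f"Implied GFLOPS/core: {gflops_per_core:.1f}")
```

The per-core figure is simply the quoted peak divided by the derived core count; it says nothing about sustained performance on GMAO workloads.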

According to Duffy, Discover is partitioned into three computing islands, with a maximum of about 46,000 cores available to any one workload after the most recent upgrade.

Result

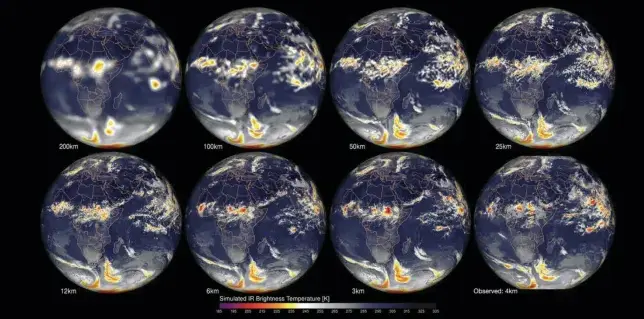

Today, global short-term prediction models that are run every six hours cover the globe with a resolution of 12 km². That requires computations for 42,506,000 grid cells with inputs of temperature, wind speed, moisture, and pressure, plus integrating estimations of other parameters at much finer resolutions, such as cloud processes, diabatic heating, aerosols, and others. These are computations GMAO could not have done in the past.
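The grid-cell count above follows from dividing Earth's surface area (510,072,000 km², as given in the Executive Summary) by the area of one cell. The sketch below reproduces that arithmetic for several of the resolutions mentioned in this article. It assumes, as the text does, that each resolution is a cell area in km² and that cells tile the surface uniformly; it counts a single surface layer and ignores vertical levels. The function name `grid_cells` is hypothetical.

```python
# Surface grid-cell counts at the resolutions quoted in the article; a simplified sketch.
EARTH_SURFACE_KM2 = 510_072_000  # global surface area of Earth (from the text)


def grid_cells(cell_area_km2: float) -> float:
    """Number of equal-area cells needed to tile Earth's surface once."""
    return EARTH_SURFACE_KM2 / cell_area_km2


# 50 km²: spacing 20 years ago; 12 km²: today's production runs;
# 1.5 km²: the high-resolution research run.
for area in (50, 12, 1.5):
    print(f"{area:>4} km² cells -> {grid_cells(area):>13,.0f} grid cells")
```

Halving the cell area doubles the number of cells, which is one reason each step toward finer resolution calls for a substantially larger cluster.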

"The advances in computing technology have allowed us to scale from 50-km² grid spacing 20 years ago with a single weather prediction per day to today's 12-km² spacing with predictions done every six hours," commented Putman. "We went from a three-dimensional variational data assimilation system to a four-dimensional system that spreads the information from observations more consistently over the six-hour assimilation window."

Upgrades to Discover have improved other capabilities of GMAO's calculations.

"They've also been able to run forecasts and models with up to ten times that resolution," added Duffy. "Using one of the SCUs with over 30,000 Intel processor cores, they ran a global prediction at 1.5 km², which was the highest-resolution global atmosphere simulation run by a US model at that time."

According to Putman, with each new cluster they gain the capability not only to run at finer resolutions but also to experiment with their models to understand how they can improve their production products. For example, the very fine 1.5-km² research model turned into a much longer-term prediction capability with 3-km² grid spacing, now offered as a research product.

GMAO's research is used by many different groups throughout NASA. For example, during the planning of the Orbiting Carbon Observatory 2 (OCO-2) between 2012 and its launch in 2014, Putman's team ran a 7-km² simulation of several atmospheric aerosols and chemicals that included carbon monoxide (CO) and carbon dioxide (CO₂) on the Discover cluster. The OCO-2 group used the data from that simulation to further refine their project plans and implementation for measuring carbon in Earth's atmosphere. The OCO-2 satellite continues to provide data to NASA and the scientific community today, with an OCO-3 instrument launched in May 2019.

"From that simulation," added Putman, "we ran a shorter-period simulation that included a full reactive chemistry. More than just the transport of aerosols and gaseous species, we looked at full interactions of over 200 chemical species in the atmosphere, such as surface ozone concentrations. That research was a precursor to a production composition forecast product of five-day air quality forecasts offered today."

With each new evolution of the Discover cluster, new capabilities are introduced, according to Duffy. For GMAO, programmers are developing Artificial Intelligence (AI) algorithms that can make their expensive simulation runs, such as long-term simulations of chemistry interactions, more efficient.

Solution Summary

The weather prediction and climate simulation workloads run by GMAO are hugely compute-intensive and can only be run on very large capability clusters, such as Discover at NASA’s NCCS. As NASA continues its yearly upgrades to Discover with new Scalable Compute Units, scientists such as Bill Putman can provide more refined predictions and richer simulations. The latest addition to Discover is a 25,600-core cluster of Intel Xeon Scalable Processors and Intel Omni-Path Architecture fabric. It enables GMAO to further its mission of supporting the ongoing work of NASA, other agencies, and researchers around (and over) the globe.

Where to Get More Information

Find out more about the Intel® Xeon® Scalable Processor family at https://www.intel.com/content/www/us/en/products/processors/xeon/scalable.html.

Learn more about Intel® Omni-Path Architecture fabric at https://www.intel.com/content/www/us/en/high-performance-computing-fabrics/omni-path-driving-exascalecomputing.html.

Find out more about the Goddard Space Flight Center at https://www.nasa.gov/goddard; learn about the GMAO at https://gmao.gsfc.nasa.gov/; and read about the Discover cluster at https://www.nccs.nasa.gov/systems/discover.

Solution Ingredients

Multiple Scalable Compute Units comprising over 103,000 cores

New upgrade to include 640 Supermicro FatTwin dual-socket nodes with Intel® Xeon® Gold 6148 processors

Intel® Omni-Path Architecture fabric

Over 6 petaFLOPS peak computing capacity