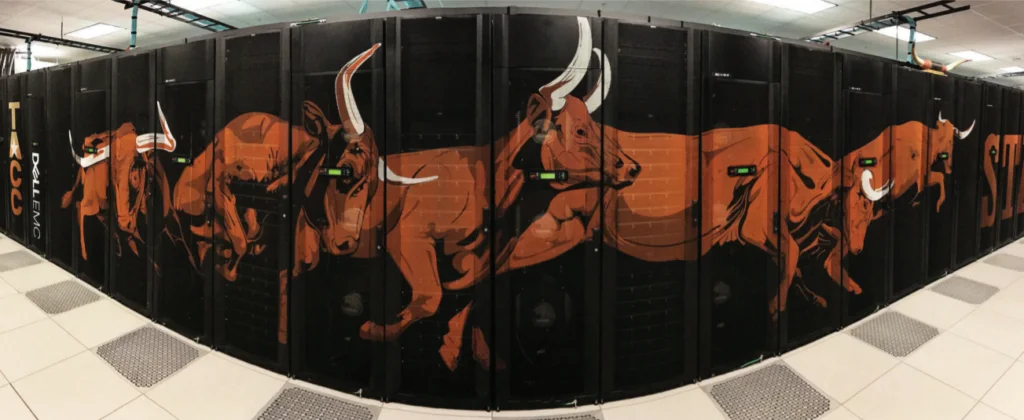

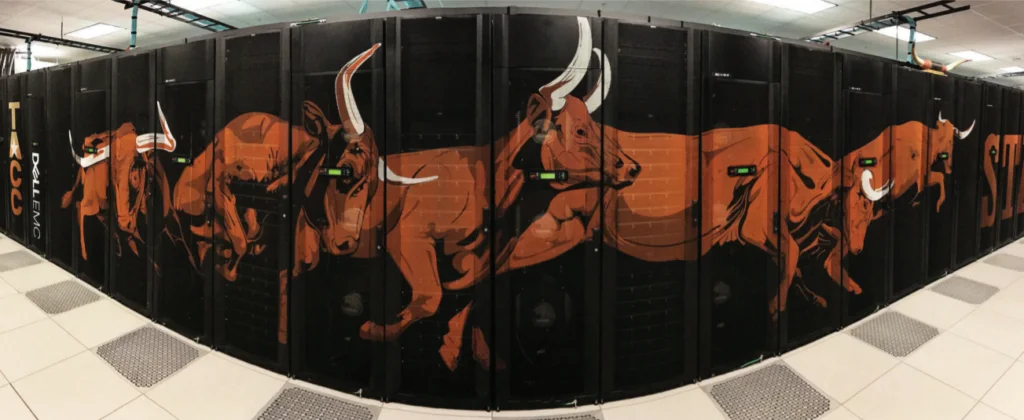

Texas Advanced Computing Center Doubles Computing Capacity with Stampede2

Intel® Omni-Path Architecture, Intel® Xeon Phi® Processor, and Intel® Xeon® Processor Scalable Family Will Help Solve Bigger Problems

Dell EMC

Stampede systems at a glance

The original Stampede was installed in 2013 with 6400 Dell PowerEdge C8220 servers containing Intel® Xeon® Processors and Intel® Xeon Phi™ Coprocessors

A new sub-system of Stampede, a prototype for Stampede2, was installed in May 2016 with 508 Dell PowerEdge servers containing Intel Xeon Phi Processors 7250 and Dell Networking H Series* Fabric based on Intel® Omni-Path Architecture (Intel® OPA)

Stampede2 was installed in 2017 with 4200 Dell EMC PowerEdge C6320p servers containing bootable Intel Xeon Phi Processors 7250 and Intel OPA (phase 1), expanding with an additional 1736 Dell EMC PowerEdge C6420 servers containing the Intel® Xeon® Processor Scalable family, for 5,936 nodes overall

Early research on Stampede2 includes tumor identification from MRI data (The University of Texas at Austin); simulations supporting the LIGO gravitational wave discovery (Cambridge University); and real-time weather forecasting to direct storm chaser trucks (University of Oklahoma).

Challenge

The Texas Advanced Computing Center (TACC) employs some of the world’s most powerful advanced computing technologies and innovative software solutions to enable researchers to answer complex questions. Every day, researchers turn to TACC resources to help them make discoveries that can change the world. TACC supports researchers working on some of the largest problems in science today, from aerospace, engineering, weather and climate, materials, and cosmology to medicine, biology, public health, and remotely sensed environmental impacts, to name a few.

"TACC exists to provide large-scale computing support to open science," said Dan Stanzione, Executive Director of TACC. "As soon as you can scale beyond what you can do on your laptop, we call that an advanced computing problem, and that’s where we get involved." TACC not only provides computational resources for researchers within The University of Texas system; it also supports NSF research nationwide as part of the Extreme Science and Engineering Discovery Environment (XSEDE). The original Stampede, an Intel-based cluster, was installed in 2013 to address many of those needs.

"Speed and capacity are a huge concern," added Stanzione, "for both time to solution and how much science we can support. With the original Stampede, we were receiving five to six times the proposals we could support. We always need more capacity to solve more problems. There are more and more communities we could support."

Solution

Serving the world of open science requires staying on top of new technologies and offering the latest and most advanced computational facilities. The Stampede series of systems at TACC began without an Intel fabric and evolved over the last four years to include the Dell* Networking H Series* Fabric based on Intel® Omni-Path Architecture (Intel® OPA). A new subsystem that served as a prototype for Stampede2, a 508-node cluster based on the Intel® Xeon Phi™ processor 7250, was installed in May 2016.

"Before the launch of Intel OPA, we worked with Dell and TACC to let them know what we were doing with high-performance fabrics," stated Brian Dietrich, account executive with Intel. "As we were planning the future, TACC staff and faculty got the chance to see Intel OPA in a production environment on a small cluster at SC15, so they could see the fabric's performance and management." TACC later ordered Intel OPA on a new 508-node sub-system of Stampede that was a prototype for Stampede2.

Installation of Stampede2 began in Q1 of 2017 with a first phase of 4200 Dell EMC PowerEdge C6320p servers with bootable Intel Xeon Phi Processors 7250 and Intel OPA. "Intel OPA is the interconnect to our storage system and everything else on the system," commented Stanzione.

Stampede2 is a 5,936-node supercomputer with a peak performance of 18 petaflops. In addition to the first-phase nodes, it includes 1736 Dell EMC PowerEdge C6420 servers with the Intel® Xeon® Processor Scalable family. Stampede2 placed #12 on the November 2017 Top500 list of supercomputers with a peak performance of nearly 13 petaflops.
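The node totals quoted above can be checked with a short calculation. The following is only an illustrative sketch: the phase labels and function names are hypothetical, and the node counts are the ones given in this case study.

```python
# Node counts per deployment phase, taken from the figures quoted in the text.
# The dictionary keys are descriptive labels chosen for this sketch.
PHASES = {
    "phase 1 (Intel Xeon Phi 7250, PowerEdge C6320p)": 4200,
    "phase 2 (Intel Xeon Scalable, PowerEdge C6420)": 1736,
}


def total_nodes(phases):
    """Return the total number of nodes across all phases."""
    return sum(phases.values())


def node_share(phases):
    """Return each phase's fraction of the total node count."""
    total = total_nodes(phases)
    return {name: count / total for name, count in phases.items()}


if __name__ == "__main__":
    print(f"Total nodes: {total_nodes(PHASES)}")
    for name, fraction in node_share(PHASES).items():
        print(f"{name}: {fraction:.1%} of nodes")
```

The two phases sum to the 5,936 nodes cited for the full system, with the Intel Xeon Phi nodes making up roughly 70 percent of the node count.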

Results

"With the 500-node cluster, we could see how to get code to run efficiently on the many integrated core architecture and high-bandwidth multi-channel DRAM (MCDRAM) memory of the Intel Xeon Phi Processor," commented Stanzione. "We've done a lot of good science on this system. It let us build experience for Stampede2."

Stampede2 is a very large heterogeneous supercomputer, supporting large-scale science and engineering applications such as NAMD, WRF, and FLASH. TACC has measured single-node performance on the sub-system of Stampede that was a prototype for Stampede2, which uses the same Intel Xeon Phi processors as Stampede2. Compared to nodes built on the Intel® Xeon® Processor E5 Product Family, the measurements show speedups ranging from 1.5x for FLASH to over 3x for WRF. "We have some extreme examples in some seismic and earth-related codes where we get 5x or 6x faster per node," stated Stanzione.

But Stampede2 also runs less scalable workloads that are increasingly common in research today, including Python*- and MATLAB*-based simulations. "Most of our larger runs," added Stanzione, "that use highly parallel MPI and OpenMP* hybrid codes are going to run very well on the Intel Xeon Phi Processor nodes. Our ability to deliver lower cost cycles in both power and the capital outlay for them will work in their favor. For the newer, less tuned, less scalable codes, the Intel Xeon Processor Scalable family is going to run circles around them, both because of the power management and the higher clock rate."

With Stampede2, TACC and the researchers that use its systems will get the best out of both types of workloads.

Solution Summary

The Stampede series of supercomputers started before Intel OPA was launched, but the fabric was integrated in the two latest deployments: Stampede2 and the sub-system of Stampede that was a prototype for Stampede2. Stampede2 is enabling TACC to support a variety of science and research based on both traditional, highly parallel codes and less parallelized software, such as Python and MATLAB workloads. Early work has returned significant insights from researchers around the world.

Where to Get More Information

Learn more about Stampede2

Learn more about Intel Omni-Path Architecture

Learn more about Dell EMC PowerEdge Servers